SoniaSandbox: Difference between revisions

| Line 2: | Line 2: | ||

==quick comment on upPass== | ==quick comment on upPass== | ||

[[Image:UpPass_subtree.jpg|150px|right]] | |||

*not a popular algorithm | *not a popular algorithm | ||

:(you won't be tested on it) | :(you won't be tested on it) | ||

*but here's the correct way to do it: | *but here's the correct way to do it: | ||

:(from [http://www.scs.fsu.edu/~beerli/BSC-5936/08-31-05/Lecture_Notes_2.pdf Peter Beerli's website]) | :(from [http://www.scs.fsu.edu/~beerli/BSC-5936/08-31-05/Lecture_Notes_2.pdf Peter Beerli's website]) | ||

F<sub>x</sub>: the upPass set we want to get to <br> | F<sub>x</sub>: the upPass set we want to get to <br> | ||

S<sub>x</sub>: the downpass set we got to <br> | S<sub>x</sub>: the downpass set we got to <br> | ||

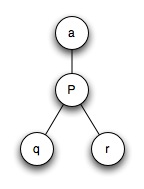

ancestor = a <br> | |||

parent = p, node we're looking at<br> | |||

children = q,r | |||

[[Image:UpPass.jpg|250px]] | [[Image:UpPass.jpg|250px]] | ||

<br><br> | |||

==Revisit overall strategy== | ==Revisit overall strategy== | ||

| Line 37: | Line 37: | ||

*#A best fit line thru data is a ML estimator. | *#A best fit line thru data is a ML estimator. | ||

[[Image:ML_plot.jpg]] | [[Image:ML_plot.jpg|150px]] | ||

==Probability Refresher== | ==Probability Refresher== | ||

| Line 44: | Line 44: | ||

<blockquote><tt> | <blockquote><tt> | ||

p(A)= | p(A)= 0.3 | ||

p(B)= | <br> | ||

p(A,B)= | p(B)= 0.3 | ||

p(A|B)= | <br> | ||

p(A,B)= 0.1 | |||

<br> | |||

p(A|B)= 0.1 / (0.1+0.2) = 1/3 = p(A,B) / p(B) | |||

<br> | |||

p(B|A) = 0.1 / (0.1+0.2) = p(A,B) / p(A) | |||

<br> | |||

Bayes' Rule: | |||

<br> | |||

p(A|B) = p(B|A) * p(A) / p(B) | |||

<br> | |||

</tt></blockquote> | </tt></blockquote> | ||

| Line 55: | Line 63: | ||

==ML in trees== | ==ML in trees== | ||

What is the best tree T given the data | *We are looking for the best tree, given some data. What is the best tree T given the data D? | ||

p(T|D) is what we want to maximize | :p(T|D) is what we want to maximize | ||

:Not intuitively obvious how we want to do that... use Bayes Law to rearrange into something we can intuitively understand | |||

<blockquote><tt> | |||

p(T|D) = p(D|T) * p(T) / p(D) | |||

</tt></blockquote> | |||

p(D) is a constant ... don't have to worry about it | *p(D) is a constant ... we don't have to worry about it | ||

* | *What is p(T), the a priori probability of the tree ? | ||

:Well, without looking at the data, do we have a way of saying any tree is more likely than another one if they don't have any data associated with them ? | |||

:No... not really | |||

*So what we're left maximizing is just p(D|T) and that sounds like a familiar concept! | |||

[[Image:Topology_distance.jpg|150px|right]] | |||

'''NOTE:''' Tree now consists of topology AND distances | |||

<blockquote><tt> | |||

p(D|T) = p(x->A|d_1) * p(x->y|d_2) * p(y->G|d_3) * p(y->G|d_4) | p(D|T) = p(x->A|d_1) * p(x->y|d_2) * p(y->G|d_3) * p(y->G|d_4) | ||

<br> | |||

p(A U B) = p(A) + p(B) - p(A,B) | p(A U B) = p(A) + p(B) - p(A,B) | ||

p(A | <br> | ||

p(A <intersect> B) = p(A)*p(B) | |||

</tt></blockquote> | |||

these | *We treat all of these mutations along the different branches as independent events (that's why you multiply the probabilities, because both things have to happen independently. | ||

==Jukes-Cantor== | ==Jukes-Cantor== | ||

Revision as of 15:27, 2 October 2006

Temp notes for Lecture 7

quick comment on upPass

- not a popular algorithm

- (you won't be tested on it)

- but here's the correct way to do it:

- (from Peter Beerli's website)

Fx: the upPass set we want to get to

Sx: the downpass set we got to

ancestor = a

parent = p, node we're looking at

children = q,r

Revisit overall strategy

for all possible trees:

- compute score (tree)

return best tree

Scoring functions

- max parsimony (fewest mutations)

- generalized parsimony (Sankoff: weighted mutation costs)

- Maximum Likelihood

ML intro

- examples of a ML estimator:

- for normally distributed random var X, X(bar), the mean of the data you observe, is a ML estimator of the mean of the distribution they were drawn from

- A best fit line thru data is a ML estimator.

Probability Refresher

total area of a box = 1

p(A)= 0.3

p(B)= 0.3

p(A,B)= 0.1

p(A|B)= 0.1 / (0.1+0.2) = 1/3 = p(A,B) / p(B)

p(B|A) = 0.1 / (0.1+0.2) = p(A,B) / p(A)

Bayes' Rule:

p(A|B) = p(B|A) * p(A) / p(B)

ML in trees

- We are looking for the best tree, given some data. What is the best tree T given the data D?

- p(T|D) is what we want to maximize

- Not intuitively obvious how we want to do that... use Bayes Law to rearrange into something we can intuitively understand

p(T|D) = p(D|T) * p(T) / p(D)

- p(D) is a constant ... we don't have to worry about it

- What is p(T), the a priori probability of the tree ?

- Well, without looking at the data, do we have a way of saying any tree is more likely than another one if they don't have any data associated with them ?

- No... not really

- So what we're left maximizing is just p(D|T) and that sounds like a familiar concept!

NOTE: Tree now consists of topology AND distances

p(D|T) = p(x->A|d_1) * p(x->y|d_2) * p(y->G|d_3) * p(y->G|d_4)

p(A U B) = p(A) + p(B) - p(A,B)

p(A <intersect> B) = p(A)*p(B)

- We treat all of these mutations along the different branches as independent events (that's why you multiply the probabilities, because both things have to happen independently.

Jukes-Cantor

cost matrix

if x == y : [JC eqn] if x != y : [JC eqn]

equations draw the little graph thing

Evolutionary Model

gives up likelihood of D|T (need branch lengths)

downPass for ML

compute L(p|q,r,d)

q , r = likelihood of the two subtrees and the distance to them

Temp notes for Lecture 6

Exams and HWs

- In-class midterm on 10/11

- studying for the exam:

- don't memorize lecture notes,

- more important to be able to work through the problems

- understand all the homeworks and you'll be prepared

Homeworks coming up

- HW5: Due next wednesday

- downPass, maximum likelihood

- HW6:

- search tree space

Up Pass

- If we know what the best answer is at the root- all of out other internal nodes aren't necessarily the best guess. We need an upPass algorithm that passes information from the root, back up to the leaves.

- For this example, we are dealing with one column of the sequence alignment

def upPass(tree,parent):

- if tree is a leaf: #(stop)

- return

- i123 = parent <intersect> left <intersect> right

- i12 = left <intersect> right

- i23 = parent <intersect> left

- i13 = parent <intersect> right

- if i123 != None:

- tree['data'] = i123

- else: #possible to simplify if empty set doesn't add with +=

- if i12 != None:

- data += i12

- if i13 != None:

- data += i13

- if i23 != None:

- data += i23

- if data == None:

- data = parent + left + right # union here

- tree['data']= data

- We are doing this for the most general case, where we considered:

- what should we do when there is no intersect ?

- When you start upPass that can't be the case because every parent should have an interset with its children if you've done downPass correctly....BUT this situation does arise as you traverse up the tree because you eliminate possibilities at a parent before you test for intersect with the children.