SoniaSandbox: Difference between revisions

| Line 47: | Line 47: | ||

:For q in ACGT: | :For q in ACGT: | ||

::For r in ACGT: | ::For r in ACGT: | ||

:::<math>P = P(q) * P(r) * P(q|p, | :::<math>P = P(q) * P(r) * P(q|p,t_{pq}) * P(r|p,t_{pr})</math> | ||

:::L[p] +=P | :::L[p] +=P | ||

</tt></blockquote> | </tt></blockquote> | ||

Revision as of 09:15, 5 October 2006

Temp notes for Lecture 8

Probability of a tree

- How do we get from what we know (score of data given a tree) to what we want (score of the tree, given the data)?

- [math]\displaystyle{ P(T|D) \rightarrow P(D|T) }[/math]

- From last time, one of the assumptions that we made is that without knowing anything about the data, two trees (T1 and T2) are equally likely.

- [math]\displaystyle{ \frac{P(T_1|D)}{P(T_2|D)} = \frac{P(D|T_1)P(T_1)}{P(D|T_2)P(T_2)} }[/math]

- Note: when we propose a tree, we'll also find the branch lengths that work best for that tree. We'll treat that as part of the topology.

- Expression for the joint probability of all data:

- [math]\displaystyle{ P(D|T) = P(A,C,C,C,G,x,y,z,w|T) }[/math]

- T = topology of the tree (includes t1,t2... all the branch lengths)

- We're going to refer to x,y,z,w as "nuisance parameters"

- We don't care what their values ARE, we're just going to try ALL of them, and just integrate through.

- Join probability of the data can be separated into each of the independent events (mutations), and the probability of each event is exactly what the J-C formula gives us the way to calculate!

- [math]\displaystyle{ P(A,C,C,C,G,x,y,z,w|T) = P_x*P_y*P_z*P_w }[/math]

- [math]\displaystyle{ P(x) = P_x }[/math]

- [math]\displaystyle{ P(y|x, t_6)*P(A|y, t_1)*P(C|y, t_2) = P_y }[/math]

- [math]\displaystyle{ P(z|x, t_8)*P(C|z, t_3) = P_z }[/math]

- [math]\displaystyle{ P(w|z, t_7)*P(C|w, t_4)*P(G|w, t_5) = P_w }[/math]

- Now that we have an expression for the joint probability, we can sum over the nuisance paramaters. For each possible set of values for the nuisance parameters, the joint probability distribution will take on a particular value. We then ADD these because we don't care what the nuisance parameters' values are, they can be one set of values, OR another set of values, OR another set of values ... (each sum is over the 4 possibilites ACGT)

- [math]\displaystyle{ \sum_x\sum_y\sum_z\sum_w P_x*P_y*P_z*P_w }[/math]

Marginalizing

In order to calculate

- [math]\displaystyle{ \sum_x\sum_y\sum_z\sum_w P_x*P_y*P_z*P_w }[/math]

- Now consider that:

- P_x doesn't depend on y or z or w,

- P_y doesn't depend on z or w,

- P_z doesn't depend on w,

- but P_w depends on everything.

- You can pull terms in front of the sum if they don't depend on the variable you're summing over.

- [math]\displaystyle{ \sum_x P_x\left(\sum_y P_y\left(\sum_z P_z\left(\sum_w P_w\right)\right)\right) }[/math]

- When you're considering the likelihood at a partcular node ([math]\displaystyle{ P_x }[/math] for example) you have to look at 64 possibilities (4 at parent, 4 at each child)

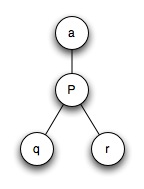

For p in ACGT:

- For q in ACGT:

- For r in ACGT:

- [math]\displaystyle{ P = P(q) * P(r) * P(q|p,t_{pq}) * P(r|p,t_{pr}) }[/math]

- L[p] +=P

- Note:

- P is probability

- p is the state of the parent node (ACGT)

Designing an Algorithm

Remember Fibonacci!

remember Fib:

- return kth number

- return series

- the series was the smart way to do it- the other one explodes computationally

Efficient computation

In the Sankoff algorithm, we could have done this by simply calling this whole function again (64 times)- but we want to do something smarter...

Want to define a function outside all of that, want it to return the probabilities P(q) for all q in ACGT P(r) for all r in ACGT

def ml(tree,pos):

- (insert code here)

- return {'A':0.1,'C':0.2,'G':0.1,'T':0.1} #values are just as an example!

- This is the trick we really want to see you implement in HW5; this is where dynamic programming comes in.

- We're not going to compute these probabilities 64 times.

- Recall how we pulled terms out of the sum.... we can take advantage of that to break up the problem in an intelligent way:

- Separate computation of P_w which doesn't depend on x,y,z

- Separate computation of P_z which doesn't depend on x,y

- ...etc...

Greedy Algorithm for trying trees

- A heuristic (contrast with dynamic programming)

- We give up and say we're not going to find an exact solution, but we're going to find an okay solution

Calculate P(D|T)

- For some trees:

- compute L

- report best L

- build 3-leaf tree

- add a leaf

- try all possibilities

- keep the best one

- repeat until all leaves added

- change leaf order and repeat

Branch Lengths

- What are we going to do about branch lengths?

- There's no proof of this, but what we're going to do is look at one branch length at a time

keeping all other branch lengths equal, let's find the length of t_o that maximizes the tree.

- Usually you get something that looks like:

parabola with big right shoulder (y-axis = L, x-axis = t_o)

- Branch lengths not very difficult to optimize.

- So it is a heuristic solution, but its pretty darn good.

Temp notes for Lecture 7

quick comment on upPass

- not a popular algorithm

- (you won't be tested on it)

- but here's the correct way to do it:

- (from Peter Beerli's website)

definitions

- Fx: the upPass set we want to get to

- Sx: the downpass set we got to

- ancestor = a

- parent = p, node we're looking at

- children = q,r

Revisit overall strategy

- Although up until now we've always started with a tree of known topology, a lot of times you wouldn't know the tree topology beforehand

for all possible trees:

- compute score (tree)

return best tree

Scoring functions

- max parsimony (fewest mutations)

- generalized parsimony (Sankoff: weighted mutation costs)

- Maximum Likelihood

ML intro

- examples of a ML estimator:

- for normally distributed random var X, X(bar), the mean of the data you observe, is a ML estimator of the mean of the distribution they were drawn from

- A best fit line thru data is a ML estimator.

Probability Refresher

total area of a box = 1

p(A)= 0.3 , p(B)= 0.3

p(A,B)= 0.1

p(A|B)= 0.1 / (0.1+0.2) = 1/3 = p(A,B) / p(B)

p(B|A) = 0.1 / (0.1+0.2) = p(A,B) / p(A)

With a little manipulation we can derive Bayes' Rule:

p(A|B) = p(B|A) * p(A) / p(B)

ML in trees

- We are looking for the best tree, given some data. What is the best tree T given the data D?

- p(T|D) is what we want to maximize

- Not obvious how we want to do that... use Bayes Law to rearrange into something we can intuitively understand

p(T|D) = p(D|T) * p(T) / p(D)

- p(D) is a constant ... we don't have to worry about it

- What is p(T), the a priori probability of the tree ?

- Well, without looking at the data, do we have a way of saying any tree is more likely than another one if they don't have any data associated with them ?

- No... not really

- So what we're left maximizing is just p(D|T) and that sounds like a familiar concept!

NOTE: Tree now consists of topology AND distances We ask, what is the probability of each mutation occuring along a branch of a certain length? What is the probability that they ALL occurred, to give us the sequences we see today?

p(D|T) = p(x->A|d_1) * p(x->y|d_2) * p(y->G|d_3) * p(y->G|d_4)

p(A U B) = p(A) + p(B) - p(A,B)

p(A <intersect> B) = p(A)*p(B)

- We treat all of these mutations along the different branches as independent events (that's why you multiply the probabilities, because all the events have to happen independently.)

Jukes-Cantor

- based on a simple cost "matrix"

- probability of changing from one particular nucleotide to another particular nucleotide is 'a'

- probability of any nucleotide staying the same is '1-3a'

if x == y :

- [JC eqn you'll derive in the hw]

if x != y :

- [JC eqn you'll derive in the hw]

Evolutionary Model

gives us likelihood of (D|T) (need branch lengths)

downPass for ML

- compute L(p|q,r,d)

q , r = likelihood of the two subtrees, d is the distance to them (?)

Temp notes for Lecture 6

Exams and HWs

- In-class midterm on 10/11

- studying for the exam:

- don't memorize lecture notes,

- more important to be able to work through the problems

- understand all the homeworks and you'll be prepared

Homeworks coming up

- HW5: Due next wednesday

- downPass, maximum likelihood

- HW6:

- search tree space

Up Pass

- If we know what the best answer is at the root- all of out other internal nodes aren't necessarily the best guess. We need an upPass algorithm that passes information from the root, back up to the leaves.

- For this example, we are dealing with one column of the sequence alignment

def upPass(tree,parent):

- if tree is a leaf: #(stop)

- return

- i123 = parent <intersect> left <intersect> right

- i12 = left <intersect> right

- i23 = parent <intersect> left

- i13 = parent <intersect> right

- if i123 != None:

- tree['data'] = i123

- else: #possible to simplify if empty set doesn't add with +=

- if i12 != None:

- data += i12

- if i13 != None:

- data += i13

- if i23 != None:

- data += i23

- if data == None:

- data = parent + left + right # union here

- tree['data']= data

- We are doing this for the most general case, where we considered:

- what should we do when there is no intersect ?

- When you start upPass that can't be the case because every parent should have an interset with its children if you've done downPass correctly....BUT this situation does arise as you traverse up the tree because you eliminate possibilities at a parent before you test for intersect with the children.